Configure NGINX

This guide describes how to set up NGINX as a reverse proxy that forwards requests to Splunk for enhanced security.

Endpoints for receiving data

Data can be sent directly to the Splunk endpoints.

For increased security, we recommend that you set up HTTPS certificates. (The Web agent also requires a valid HTTPS certificate, because data is sent directly from the user's browser over HTTPS.)

A reverse proxy is also recommended if data has to be received from outside the company network.

| Used for | Reverse proxy endpoint | Splunk consumer script |
|---|---|---|
| Desktop/Endpoint agent data receiving | https://fqdn/data/pcagent (adds the request to the RabbitMQ queue) | bin\task_mq_consumer_pcagent.py reads from the RabbitMQ queue. |
| Web agent data receiving | https://fqdn/data/browser (adds the request to the RabbitMQ queue; has to respond with the header Access-Control-Allow-Origin: *) | bin\task_mq_consumer_web.py reads from the RabbitMQ queue. |
| Optional: Splunk HTTP Event Collector | https://fqdn/services/collector | http://ip_or_fqdn:8088/services/collector |

Configuring NGINX

sudo vi /etc/nginx/nginx.conf

# Add after the SSL settings to improve HTTPS performance.

ssl_session_cache shared:SSL:10m;

ssl_session_timeout 10m;

sudo vi /etc/nginx/sites-available/site_fqdn

Edit /etc/nginx/sites-available/default or /etc/nginx/conf.d/default.conf, depending on the NGINX version, and replace its content with (or add) the following:

# Disable access logging for the /data/ endpoints, or set up rotation/deletion of the log files; they can become very large.

map $request $loggable {

~*/data/* 0;

default 1;

}

server {

# Enable for HTTPS; remember to add the certificates at the bottom or generate them with Certbot.

#listen 443 ssl default_server;

#listen [::]:443 ssl default_server;

listen 80 default_server;

server_name _;

server_tokens off;

# Disable access logging for the /data/ endpoints, or set up rotation/deletion of the log files; they can become very large.

access_log /var/log/nginx/access.log combined if=$loggable;

# Set the client body size limit to 1024 MB to allow Splunk POSTs to the REST API and uploads of apps.

client_max_body_size 1024M;

# Fix "414 Request-URI Too Large" when Splunk deep links into a search from a table.

client_header_buffer_size 64k;

large_client_header_buffers 4 64k;

# Reverse proxy Splunk from port 8000 to HTTPS port 443.

# Note that NGINX and Splunk both have to run HTTPS, or a "No cookies detected" error can occur and the Splunk login fails.

location / {

proxy_pass_request_headers on;

proxy_set_header x-real-IP $remote_addr;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

proxy_set_header host $host;

proxy_pass https://localhost:8000/;

}

location /nginx_status {

stub_status on;

access_log off;

#allow 127.0.0.1;

#deny all;

}

# Reverse proxy the Splunk REST API for Robot/Desktop Protobuf endpoint.

location /data/pcagent {

# Disable access logging or set up rotation/deletion of the log files; they can become very large.

access_log off;

# Disable keepalive to free the thread/socket for the next user (to support 20,000 users per server).

keepalive_timeout 0;

include uwsgi_params;

uwsgi_pass unix:/run/uwsgi/wsgi-uxm.sock;

limit_except GET POST {

deny all;

}

}

# Reverse proxy the Splunk REST API for Web data endpoint.

location /data/browser {

# Disable access logging or set up rotation/deletion of the log files; they can become very large.

access_log off;

# Disable keepalive to free the thread/socket for the next user (to support 20,000 users per server).

keepalive_timeout 0;

include uwsgi_params;

uwsgi_param HTTP_X-Forwarded-For $proxy_add_x_forwarded_for;

add_header 'Access-Control-Allow-Origin' '*';

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS';

add_header 'Access-Control-Allow-Headers' 'origin, content-type, accept, LoginRequestCorrelationId, content-encoding';

add_header 'Content-Type' 'text/plain';

uwsgi_pass unix:/run/uwsgi/wsgi-uxm.sock;

limit_except GET POST OPTIONS {

deny all;

}

}

# Reverse proxy for Splunk HTTP Event Collector if needed for API to pull data.

#location /services/collector {

# # Disable access logging or set up rotation/deletion of the log files; they can become very large.

# access_log off;

# proxy_pass_request_headers on;

# proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

# proxy_set_header host $host;

# proxy_pass https://localhost:8088/services/collector;

# limit_except POST {

# deny all;

# }

#}

# SSL certificates if Let's Encrypt isn't used.

#ssl_certificate /mnt/disks/data/certs/fqdn.crt;

#ssl_certificate_key /mnt/disks/data/certs/fqdn.key;

#include /etc/letsencrypt/options-ssl-nginx.conf; # managed by Certbot using own TLS/Ciphers

#ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; # managed by Certbot

# See https://gist.github.com/gavinhungry/7a67174c18085f4a23eb

ssl_protocols TLSv1.3 TLSv1.2;

ssl_prefer_server_ciphers on;

ssl_ecdh_curve secp521r1:secp384r1;

ssl_ciphers TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

ssl_session_cache shared:TLS:2m;

ssl_buffer_size 4k;

# OCSP stapling

ssl_stapling on;

ssl_stapling_verify on;

resolver 1.1.1.1 1.0.0.1 [2606:4700:4700::1111] [2606:4700:4700::1001]; # Cloudflare

# Set HSTS to 2 years

add_header Strict-Transport-Security 'max-age=63072000; includeSubDomains; preload' always;

}
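The `map` block at the top of this configuration decides, per request, whether an access-log entry is written. The Python sketch below mimics that decision so the pattern can be tried against sample request lines. It is an illustration only: `loggable` is a hypothetical helper name, and Python's `re` module stands in for the regex engine NGINX uses. Note that the trailing `*` in `/data/*` is a regex quantifier (zero or more slashes), not a wildcard, so the pattern matches any request line that contains `/data`.

```python
import re

# Stand-in for the NGINX map key "~*/data/*":
# "~*" means a case-insensitive regex, and the match is unanchored.
DATA_PATTERN = re.compile(r"/data/*", re.IGNORECASE)


def loggable(request_line: str) -> int:
    """Mimic the map: 0 (do not log) when the request line matches, else 1."""
    return 0 if DATA_PATTERN.search(request_line) else 1


if __name__ == "__main__":
    samples = (
        "POST /data/browser HTTP/1.1",     # data endpoint, not logged
        "GET /en-US/app/search HTTP/1.1",  # Splunk UI, logged
        "GET /database HTTP/1.1",          # also matches: "/data" plus zero slashes
    )
    for line in samples:
        print(loggable(line), line)
```

If only the two data endpoints should be excluded from the log, a stricter pattern (for example one that requires the slash after `/data`) may be preferable; that would be a change to the configuration above, not something it does today.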

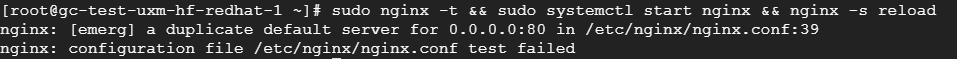

Afterwards, reload the NGINX configuration:

sudo nginx -t && sudo systemctl start nginx && sudo nginx -s reload

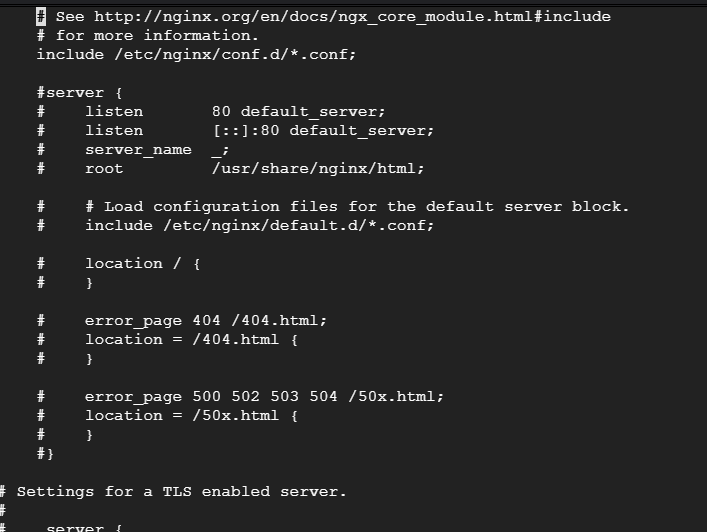

If NGINX fails to start because of a duplicate default server, delete or comment out the default website in /etc/nginx/nginx.conf and reload NGINX again.
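To locate where a second default server is declared, the configuration files can be scanned for active `listen ... default_server` directives. The following Python sketch is one way to do that, under simplifying assumptions: `default_server_listens` and `scan` are hypothetical helper names, the matching is a plain regular expression rather than a real NGINX parser, and lines that start with `#` are treated as disabled. Two hits only conflict when they use the same address and port.

```python
import re
from pathlib import Path

# An uncommented "listen ... default_server ...;" directive at the start of a line.
LISTEN_DEFAULT = re.compile(
    r"^\s*listen\s+[^;#]*\bdefault_server\b[^;#]*;", re.MULTILINE
)


def default_server_listens(config_text: str) -> list[str]:
    """Return every active listen directive that claims default_server."""
    return [m.group(0).strip() for m in LISTEN_DEFAULT.finditer(config_text)]


def scan(paths) -> dict:
    """Map each file to its default_server listen directives (files without any are skipped)."""
    hits = {}
    for path in map(Path, paths):
        found = default_server_listens(path.read_text())
        if found:
            hits[str(path)] = found
    return hits


# Example, using the file locations named in this guide:
# scan(["/etc/nginx/nginx.conf", "/etc/nginx/sites-available/default"])
```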

Errors can be viewed in the log files with:

sudo tail -n 100 -f /var/log/nginx/*

NGINX can be tested by visiting FQDN/data/browser or IP/data/browser. UXM outputs "no get/post data received" if NGINX and uWSGI work correctly.
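The same check can be scripted. The Python sketch below requests the browser endpoint and verifies the two things this guide states about it: the response body contains the "no get/post data received" message, and the `Access-Control-Allow-Origin: *` header is present. The function names are hypothetical, the case-insensitive text comparison is an assumption, and the URL must be replaced with your own FQDN or IP.

```python
from urllib.request import urlopen

EXPECTED_TEXT = "no get/post data received"  # message quoted in this guide


def response_ok(headers: dict, body: str) -> bool:
    """True when the CORS header and the expected message are both present."""
    lowered = {name.lower(): value for name, value in headers.items()}
    has_cors = lowered.get("access-control-allow-origin") == "*"
    # Assumption: the message is compared case-insensitively.
    return has_cors and EXPECTED_TEXT in body.lower()


def check_endpoint(url: str, timeout: float = 5.0) -> bool:
    """Fetch the endpoint and evaluate the response (raises on connection or HTTP errors)."""
    with urlopen(url, timeout=timeout) as resp:
        body = resp.read().decode("utf-8", errors="replace")
        return response_ok(dict(resp.headers.items()), body)


# Example (needs a reachable server):
# check_endpoint("https://fqdn/data/browser")
```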

HTTPS certificate

Let's Encrypt setup

Let's Encrypt can generate HTTPS certificates automatically if the domain is publicly available. The FQDN has to be reachable from the internet, or Let's Encrypt will fail.

The certificates are valid for 90 days, and a scheduled job on the server renews them automatically (by default, Certbot renews a certificate once fewer than 30 days of validity remain).
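To make the renewal timing concrete, the following Python sketch computes how many days a certificate has left and whether it falls inside a 30-day renewal window. The 30-day window is an assumption based on Certbot's default behaviour, the helper names are hypothetical, and the date string uses the `notAfter` format that Python's `ssl` module reports for certificates.

```python
import ssl
from datetime import datetime, timedelta, timezone

RENEW_WINDOW_DAYS = 30  # assumption: Certbot's default renewal window


def days_remaining(not_after: str, now: datetime) -> float:
    """Days until expiry for a notAfter string such as 'Jan  5 09:34:43 2018 GMT'."""
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now) / timedelta(days=1)


def due_for_renewal(not_after: str, now: datetime) -> bool:
    """True when fewer than RENEW_WINDOW_DAYS days of validity remain."""
    return days_remaining(not_after, now) < RENEW_WINDOW_DAYS


# Example: pass the current time as an aware UTC datetime.
# due_for_renewal("Jan  5 09:34:43 2018 GMT", datetime.now(timezone.utc))
```

With a 90-day lifetime and a 30-day window, a healthy certificate is replaced roughly every 60 days, so one that has fewer than 30 days left for long suggests the renewal job is not running.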

See https://certbot.eff.org/instructions?ws=nginx&os=ubuntufocal for how to set up Certbot.

sudo certbot --nginx -d fqdn --email name@domain.com --agree-tos

Use support@uxmapp.com as the email address for UXM SaaS servers.

Certbot asks whether HTTP traffic should be redirected to HTTPS; you can let it do so (option 2).

You can view the certificates with:

certbot certificates