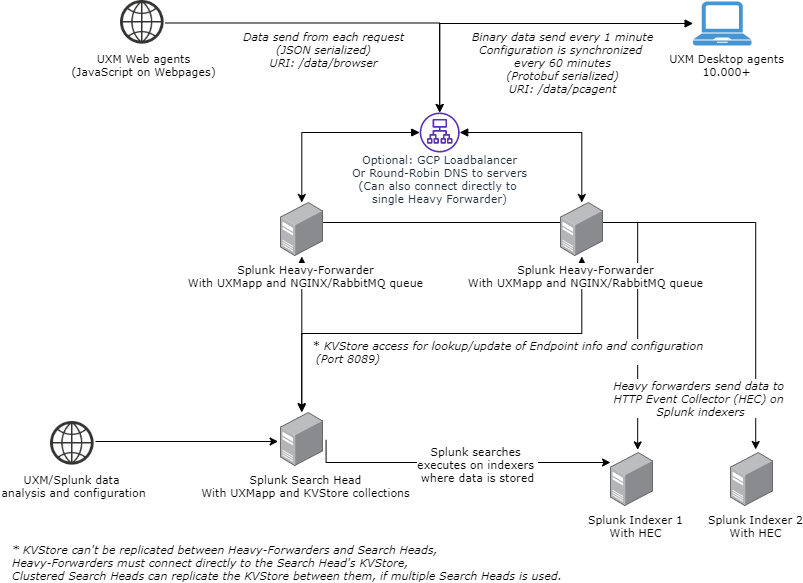

Distributed Splunk Environment

Follow the steps below to install the UXM monitoring solution on-premises. The software can be downloaded from here: Download server software. (Splunk app downloads will move to Splunkbase in 2026.)

UXM is set up to handle 10,000+ Desktop agents and millions of web page requests per day.

The recommended architecture is to set up a Splunk Heavy Forwarder with UXM (containing the NGINX/RabbitMQ queue) and send data via HTTP Event Collector (HEC) to the indexers.

Setup Splunk Indexers

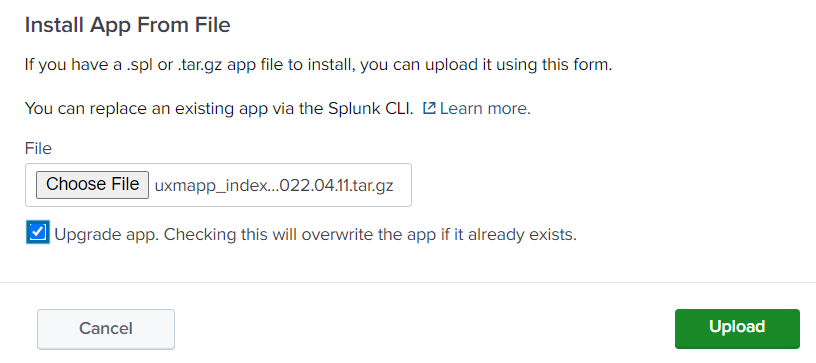

Install Indexer App

Install the app "uxmapp_indexer_YYYY.MM.DD.tar.gz" on the Splunk Indexers.

Installing the indexer app creates the following UXM indexes:

| Index name | Type | Description |
|---|---|---|
| uxmapp_response | Events | |
| uxmapp_sessiondata | Events | |
| uxmapp_metrics | Metrics | Metric store with high-performance metrics for charts with limited dimensions. |
| uxmapp_metrics_rollup | Metrics | Hourly rollup of metrics for long-term reporting and fastest performance. |
| uxmapp_confidential | Events | Confidential data that only a limited number of people can access and view. |
| uxmapp_si_hourly | Events | For hourly summary index rollups. |
| uxmapp_si_quarterly | Events | For quarterly summary index rollups. |

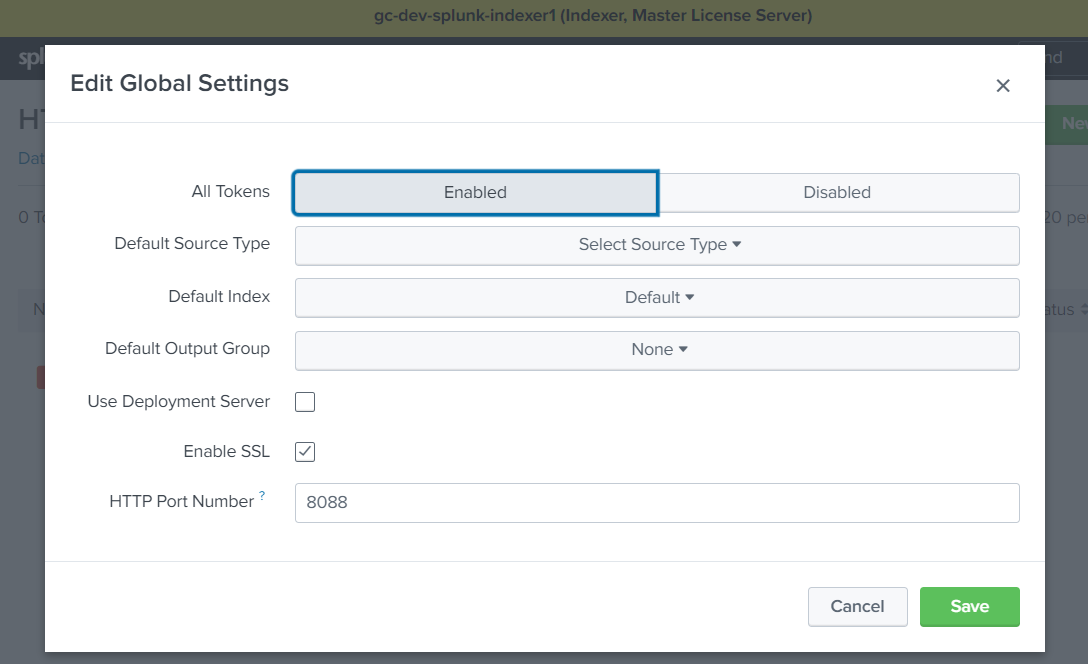

Activate HTTP Event Collector

Activate the HTTP Event Collector (HEC) on the indexers that should receive the UXM data.

This is done under Settings -> Data Inputs -> HTTP Event Collector -> Global Settings.

Write down the FQDN/IP of the indexer, whether SSL is enabled, and the port number (default 8088). These settings are used later when setting up the Heavy Forwarder.

Create HTTP Event Collector for Receiving UXM Data

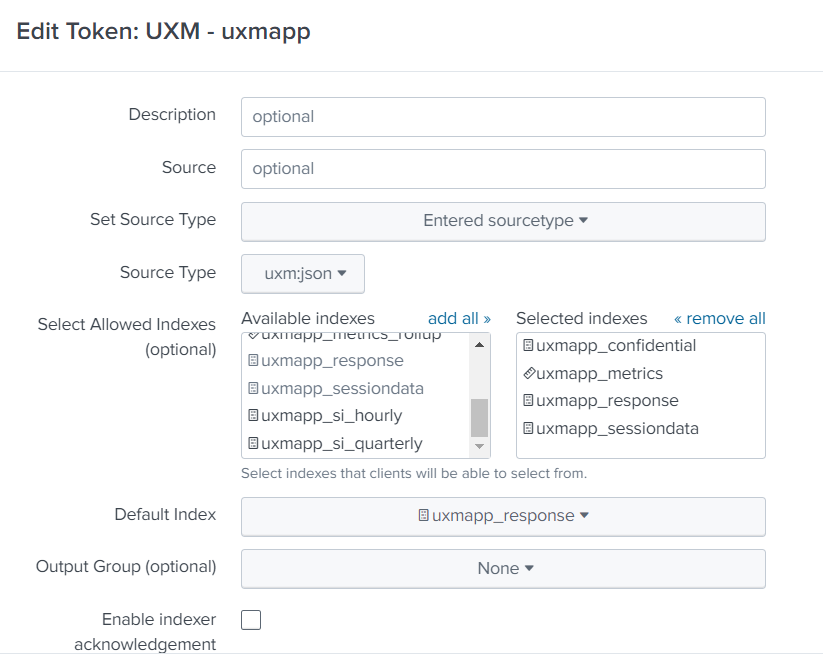

Create a new HTTP Event Collector token and call it "UXM - uxmapp". Indexer acknowledgement has to be disabled.

Select:

- Source Type: Automatic.

- App Context: UXM Indexers (uxmapp_indexer).

- Indexes: Select the 4 indexes uxmapp_confidential, uxmapp_metrics, uxmapp_response, uxmapp_sessiondata.

- Default Index: uxmapp_response.

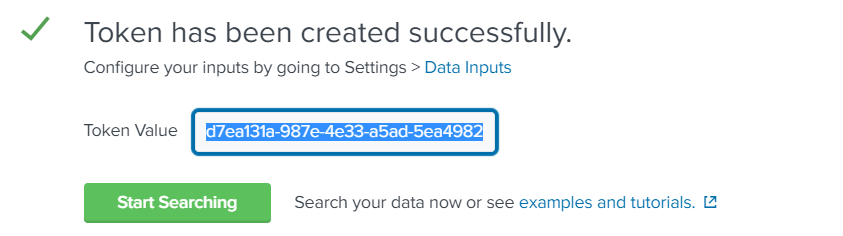

Press Preview and then Submit. Write down the token value; it is used when configuring the Heavy Forwarder and Search Head.
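Before moving on, it can help to confirm that the token and the HEC settings noted earlier actually work. The following is a minimal sketch, not part of the UXM software: the function name `build_hec_request`, the host name, and the token value are hypothetical placeholders. It relies only on the standard Splunk HEC event endpoint (`/services/collector/event`) and the `Authorization: Splunk <token>` header.

```python
import json
import urllib.request


def build_hec_request(host, token, port=8088, use_ssl=True, index="uxmapp_response"):
    """Build (but do not send) an HEC request carrying one test event."""
    scheme = "https" if use_ssl else "http"
    url = f"{scheme}://{host}:{port}/services/collector/event"
    payload = {"event": "UXM HEC connectivity test", "index": index}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical values: replace with the FQDN/IP, port and token you wrote down.
    req = build_hec_request("indexer.example.com", "00000000-0000-0000-0000-000000000000")
    print(req.full_url)
    # To actually send the event, uncomment the next line:
    # print(urllib.request.urlopen(req, timeout=10).read())
```

If the indexer uses a self-signed certificate, `urlopen` will reject the connection unless you pass an appropriate `ssl.SSLContext`; that part is left out of the sketch on purpose.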

Setup Splunk Search Head

The Splunk Search Head contains the dashboards and data models and is where users analyse the UXM data.

Please note that the UXM app creates multiple scheduled searches that populate summary indexes. These require that you follow Splunk best practices and forward all data from the Search Heads to the Indexers.

Install App

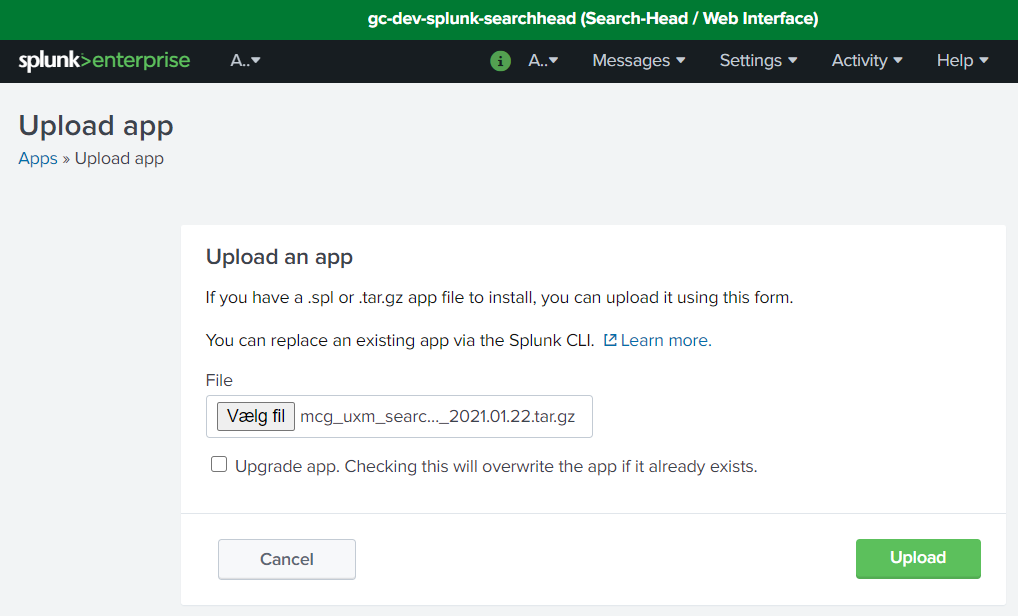

Install the following apps on the Search Head. You can skip the restart until later.

- Search Head app: uxmapp_searchhead_YYYY.MM.DD.tar.gz

- Custom visualization: uxmapp_waterfall_YYYY.MM.DD.tar.gz

- Custom visualization: uxmapp_worldmap_YYYY.MM.DD.tar.gz

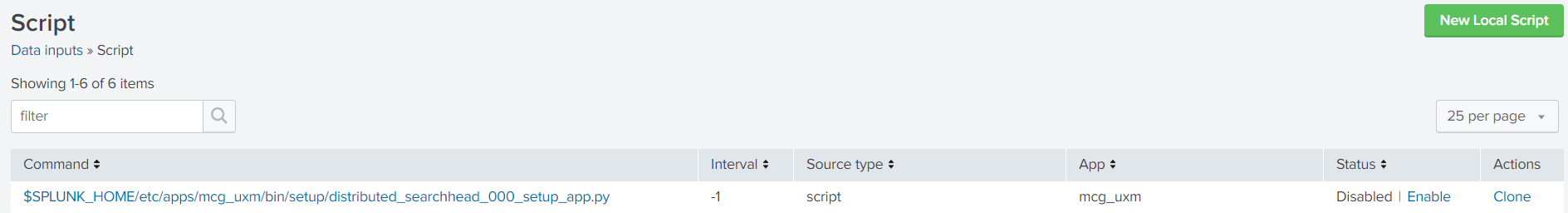

Go to Settings -> Data Inputs -> Scripts and enable the script setup/distributed_searchhead_000_setup_app.py. The script creates the default KVStore entries, the Splunk roles, and the Splunk user that allows Heavy Forwarders to access the KVStore on the Search Head. It disables itself automatically when done.

You can also follow this guide to "Setup Search Head Manually" if you prefer to configure Splunk manually.

You can view the output of the script by running the following Splunk search:

index="_internal" source="*_setup_distributed_searchhead_000_setup_app.log"

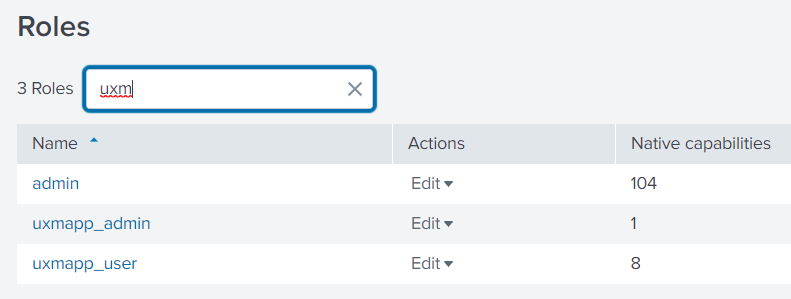

Verify Roles

After the script has run, there are two new roles called uxmapp_user and uxmapp_admin:

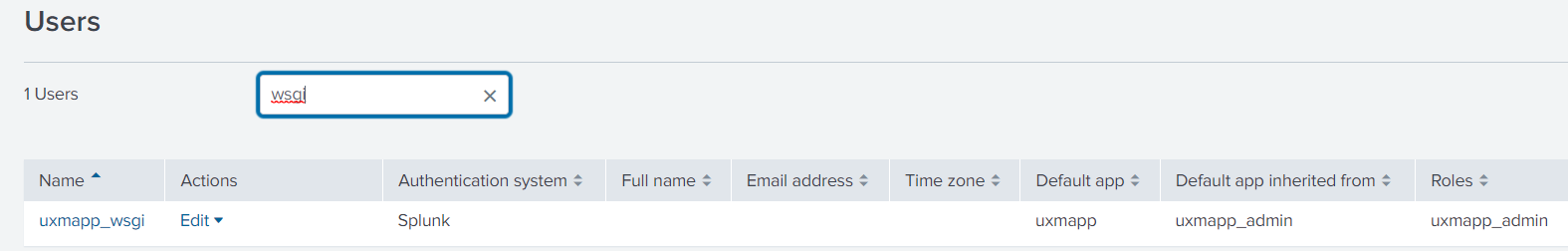

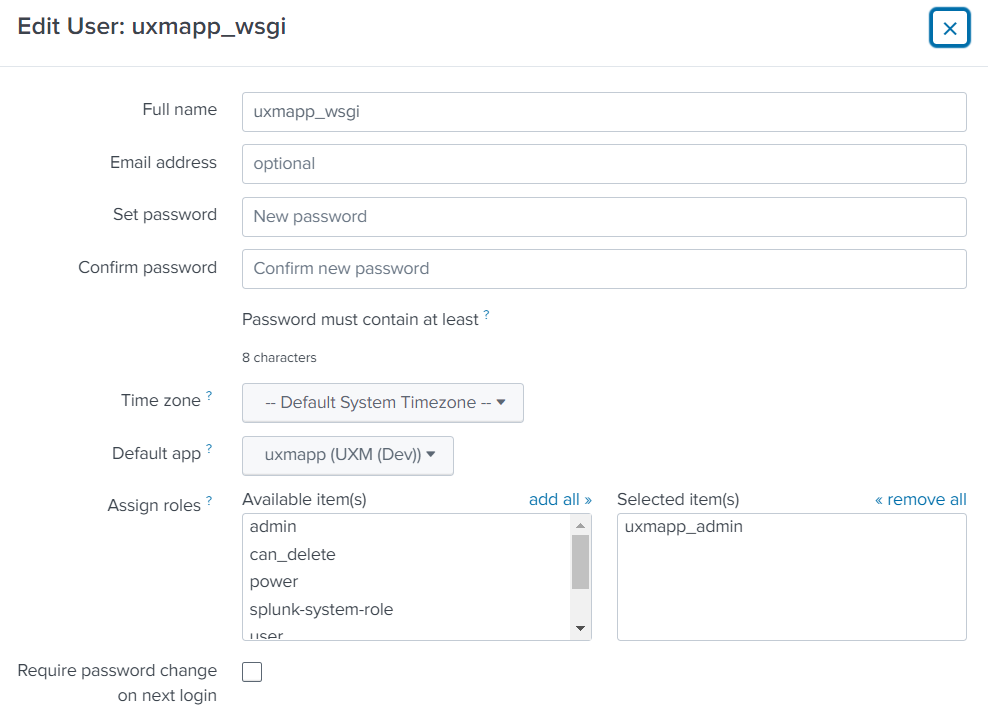

There is also a new user called uxmapp_wsgi. Reset the password for this user and disable the requirement to change the password on next login. Store the password; it is used later when setting up the Heavy Forwarder.

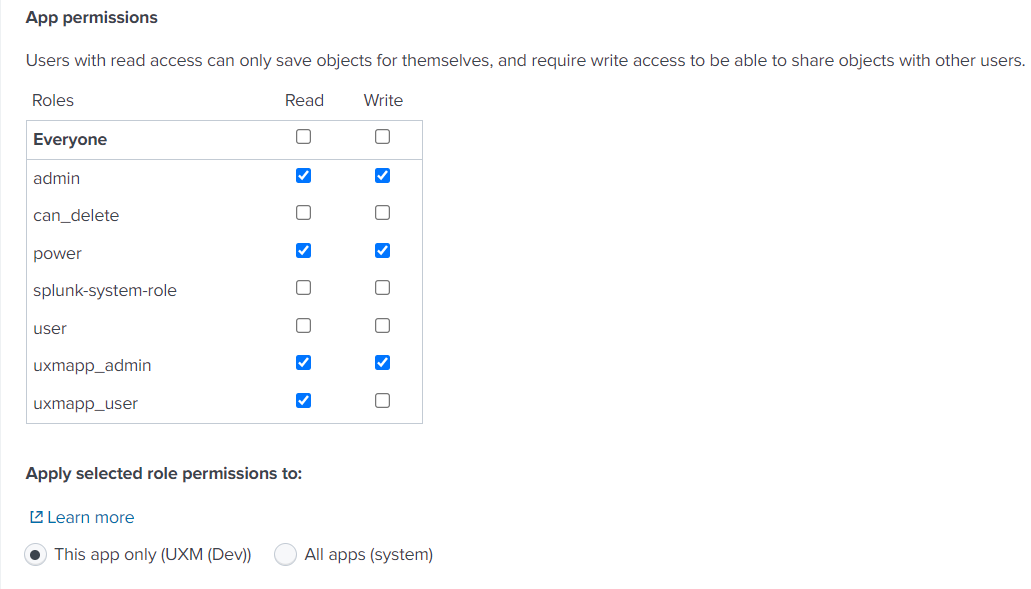

Setup/Verify Permissions for App

Go to Apps -> Manage Apps and click permissions on the uxmapp app.

Add read permission for the newly created uxmapp_user role and read+write permission for the uxmapp_admin role.

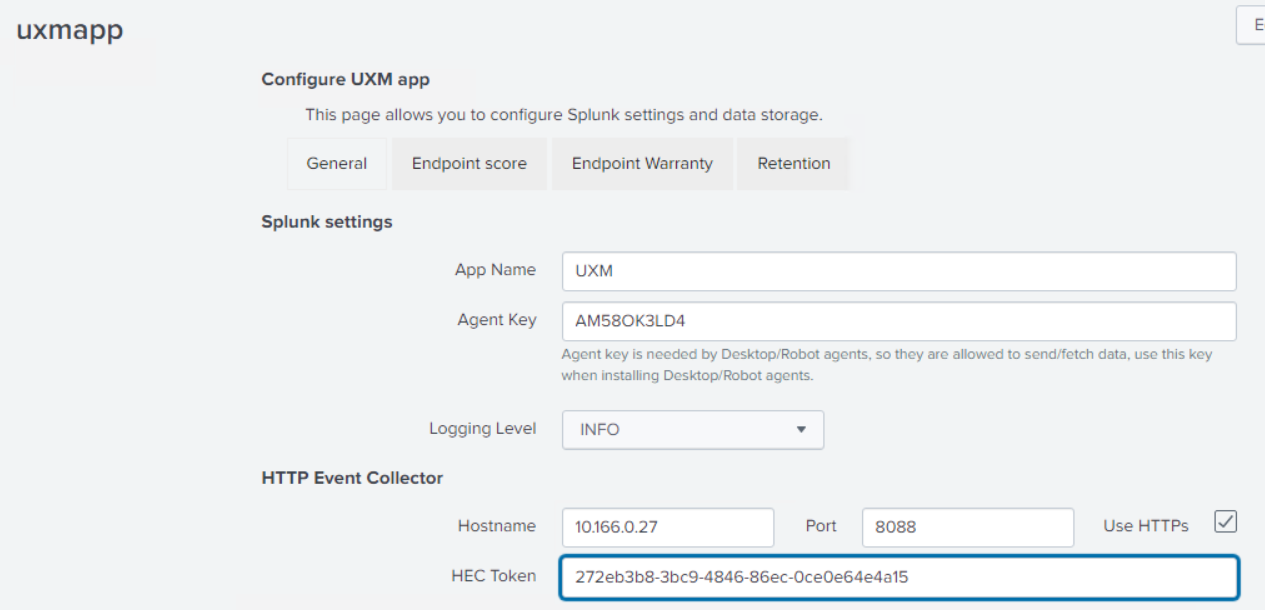

Setup/Verify UXM Configuration

Open the UXM app; it will ask you to configure it. Enter the HTTP Event Collector hostname and token.

Apply the license, leave the remaining values at their defaults, and press Save.

Enable Splunk Batch Processing Scripts

Enable the following Data Input scripts under Settings -> Data Inputs -> Scripts:

- check_license.py

- daily_maintenance.py

- task_generate_tags.py

- update_alert_event_summaries.py

- update_applications.py

- update_endpoint_groups.py

- update_kvstores.py

Restart the Splunk Search Head once all configuration is done.

Setup Heavy Forwarder

The Splunk Heavy Forwarder (HF) receives the data and processes it according to the configuration stored in the KVStores on the Splunk Search Head. It also responds with configuration to the UXM Desktop agents when they synchronize hourly.

NGINX (Linux) or IIS (Windows) and RabbitMQ are needed to control data reception and queuing so that the HF and the Splunk environment are not overloaded, because receiving data from desktop endpoints and public websites requires a high number of TCP connections.

Setup RabbitMQ

Login to the heavy forwarder and install RabbitMQ following the official guides:

- Ubuntu

- Red Hat

- Windows

Ubuntu: Install the newest version of RabbitMQ and Erlang following the guide https://www.rabbitmq.com/install-debian.html

We recommend locking (holding) the package. Before a major upgrade (for example from 3.11.x to 3.12.x), all feature flags have to be enabled; an upgrade without this step breaks rabbitmq-server.

sudo rabbitmqctl enable_feature_flag all

sudo apt-mark hold rabbitmq-server

After installation, enable the management web interface and add the UXM user.

Remember to generate a password and substitute it for the "{GeneratedPassword}" placeholder.

sudo rabbitmq-plugins enable rabbitmq_management

sudo service rabbitmq-server start

sudo rabbitmqctl add_user uxmapp {GeneratedPassword}

sudo rabbitmqctl set_user_tags uxmapp monitoring

sudo rabbitmqctl add_vhost /uxmapp/

sudo rabbitmqctl set_permissions -p /uxmapp/ uxmapp ".*" ".*" ".*"

sudo rabbitmqctl delete_user guest

sudo apt-mark hold rabbitmq-server

Red Hat: Install the newest version of RabbitMQ and Erlang following the guide https://www.rabbitmq.com/install-rpm.html

Red Hat 7 ships with the older RabbitMQ 3.3.5, which UXM supports. It can be installed with:

sudo yum install erlang rabbitmq-server

After installation, enable the management web interface and add the UXM user.

Remember to generate a password and substitute it for the "{GeneratedPassword}" placeholder.

sudo rabbitmq-plugins enable rabbitmq_management

sudo service rabbitmq-server start

sudo rabbitmqctl add_user uxmapp {GeneratedPassword}

sudo rabbitmqctl set_user_tags uxmapp monitoring

sudo rabbitmqctl add_vhost /uxmapp/

sudo rabbitmqctl set_permissions -p /uxmapp/ uxmapp ".*" ".*" ".*"

sudo rabbitmqctl delete_user guest

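# Red Hat has no apt-mark; assuming the yum versionlock plugin (yum-plugin-versionlock) is installed, hold the package with:

sudo yum versionlock add rabbitmq-server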

Windows: Install the newest version of RabbitMQ and Erlang following the guide https://www.rabbitmq.com/install-windows.html

Open an elevated command prompt (as administrator) and run the commands below to configure a new virtual host and user for UXM. Adjust the version number in the path to match the installed RabbitMQ version.

Remember to generate a password and substitute it for the "{GeneratedPassword}" placeholder.

cd "C:\Program Files\RabbitMQ Server\rabbitmq_server-4.1.6\sbin"

rabbitmq-plugins enable rabbitmq_management

rabbitmqctl add_user uxmapp {GeneratedPassword}

rabbitmqctl set_user_tags uxmapp monitoring

rabbitmqctl add_vhost /uxmapp/

rabbitmqctl set_permissions -p /uxmapp/ uxmapp ".*" ".*" ".*"

rabbitmqctl delete_user guest
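The "{GeneratedPassword}" placeholder in the RabbitMQ commands above needs a strong random value. One possible way to produce it is sketched below, assuming Python 3 is available on the machine where you prepare the installation. The function name `generate_password` and the default length of 32 are illustrative choices, not UXM requirements. The alphabet is limited to letters and digits so the value can be pasted into a shell or cmd command without quoting problems.

```python
import secrets
import string


def generate_password(length=32):
    """Return a random password drawn from ASCII letters and digits only."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    # Print one password; use it in place of {GeneratedPassword}.
    print(generate_password())
```

Store the generated value somewhere safe, since the same RabbitMQ password is entered again when configuring the UXM app on the Heavy Forwarder.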

Install App

Install the app "uxmapp_heavyforwarder_YYYY.MM.DD.tar.gz" on the Splunk Heavy Forwarder. You can skip the restart until later.

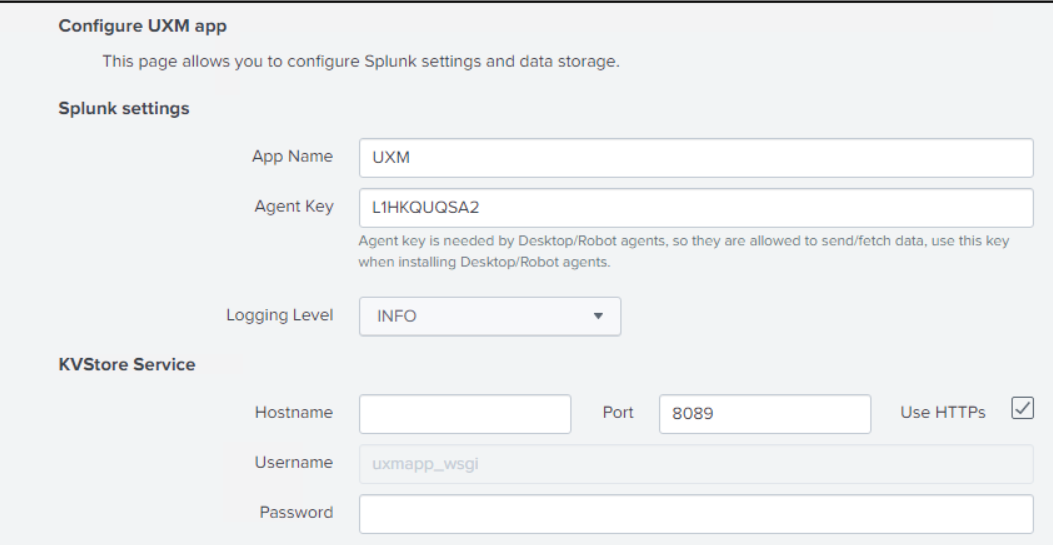

Configure App

Open the UXM app; it will ask you to configure it. Use the same Agent Key as the Search Head, enter the KVStore, HTTP Event Collector and RabbitMQ settings, leave the remaining values at their defaults, and press Save.

The storage path is used for UXM Desktop agent log files and for UXM Robot agent video, screenshot and log results. This setting can be skipped.

Save the generated Agent Key for later use when deploying the UXM Desktop agent to endpoints. See Deploying Desktop Agents.

Save and restart Splunk.

Setup uWSGI/Python

- Ubuntu

- Red Hat

- Windows

Ubuntu: Install Python 3 and set up the uWSGI environment by running the following commands:

sudo apt-get update

sudo apt-get -y install python3-pip python3-virtualenv

Set up the uWSGI environment using a non-root user:

# SPLUNK_HOME is the Splunk installation directory (commonly /opt/splunk)

cd "$SPLUNK_HOME/etc/apps/uxmapp/bin/wsgi/"

sudo mkdir -p /var/log/uwsgi/ && sudo chown -R splunk:splunk /var/log/uwsgi/

sudo virtualenv -p python3 ../wsgi/ && sudo chown -R splunk:splunk ../wsgi/

source ../wsgi/bin/activate

pip install uwsgi

deactivate

Add the WSGI data receiver as a service that starts with the server:

echo "Auto-start uWSGI on boot"

sudo cp ../wsgi/wsgi-uxm.template.service /etc/systemd/system/wsgi-uxm.service

# Check that uxmapp folder is correct in params: WorkingDirectory, Environment and ExecStart

# sudo vi /etc/systemd/system/wsgi-uxm.service

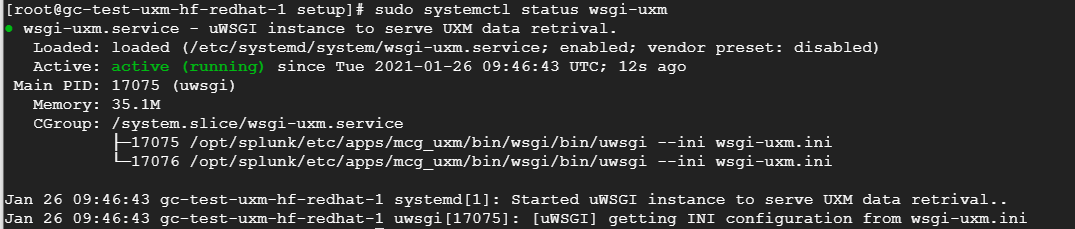

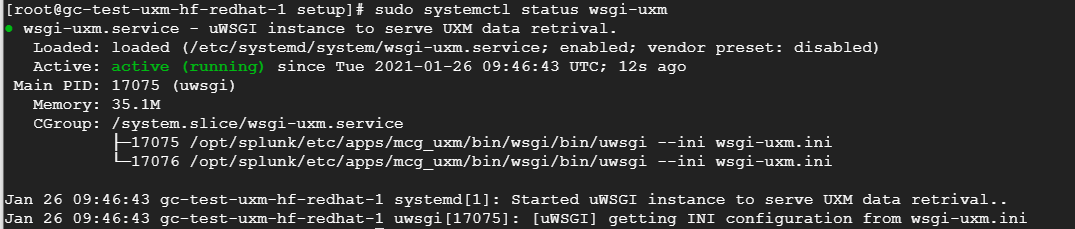

sudo systemctl enable wsgi-uxm && sudo systemctl restart wsgi-uxm

sudo systemctl status wsgi-uxm

After the commands have been executed, systemctl status wsgi-uxm should show the following output:

Red Hat: Install Python 3 and set up the uWSGI environment by running the following commands:

sudo yum install python3 python3-pip python3-virtualenv python3-devel gcc

Set up the uWSGI environment using a non-root user:

# SPLUNK_HOME is the Splunk installation directory (commonly /opt/splunk)

cd "$SPLUNK_HOME/etc/apps/uxmapp/bin/wsgi/"

sudo mkdir -p /var/log/uwsgi/ && sudo chown -R splunk:splunk /var/log/uwsgi/

sudo virtualenv -p python3 ../wsgi/ && sudo chown -R splunk:splunk ../wsgi/

source ../wsgi/bin/activate

pip install uwsgi

deactivate

Add the WSGI data receiver as a service that starts with the server:

echo "Auto-start uWSGI on boot"

sudo cp ../wsgi/wsgi-uxm.template.service /etc/systemd/system/wsgi-uxm.service

# Check that uxmapp folder is correct in params: WorkingDirectory, Environment and ExecStart

# sudo vi /etc/systemd/system/wsgi-uxm.service

sudo systemctl enable wsgi-uxm && sudo systemctl restart wsgi-uxm

sudo systemctl status wsgi-uxm

After the commands have been executed, systemctl status wsgi-uxm should show the following output:

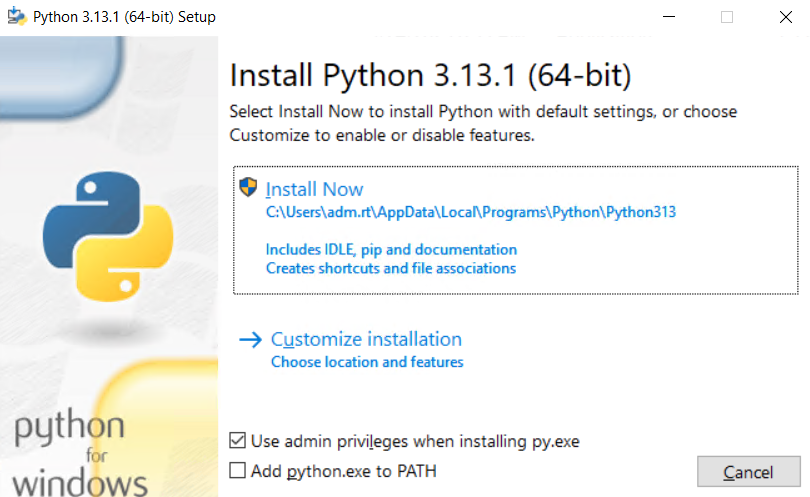

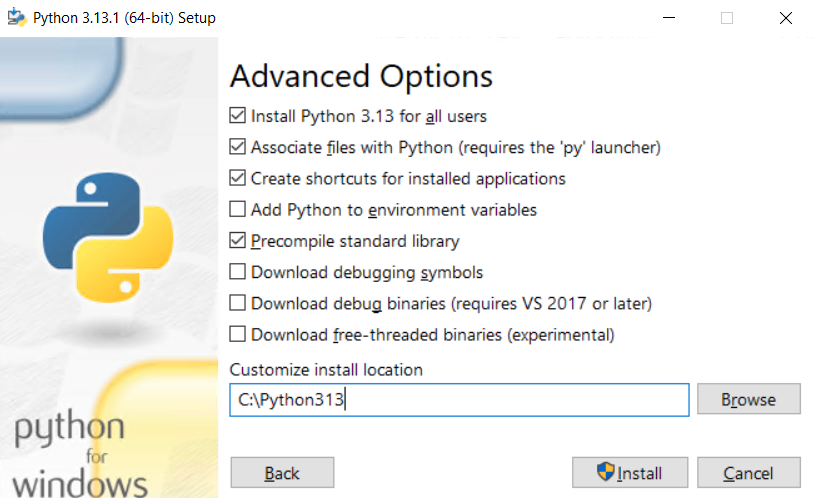

Windows: Install the newest Python 3.13.x from https://www.python.org/downloads/windows/.

Check "Use admin privileges ..." and select "Customize installation".

Under Advanced Options, select "Install for all users" and install under "C:\Python313". (Avoid long paths and whitespace in the path.)

"C:\Program Files\Splunk\etc\apps\uxmapp\bin\wsgi\web.config" must be modified if you use a path other than "C:\Python313".

Open an elevated command prompt (as administrator) and execute:

"C:\Python313\Scripts\pip.exe" install wfastcgi

Output:

Collecting wfastcgi

Using cached wfastcgi-3.0.0.tar.gz (14 kB)

Using legacy 'setup.py install' for wfastcgi, since package 'wheel' is not installed.

Installing collected packages: wfastcgi

Running setup.py install for wfastcgi ... done

Successfully installed wfastcgi-3.0.0

Setup NGINX (Linux) or IIS (Windows)

NGINX/IIS provides the web front end for the uWSGI data receiver.

For increased security, we recommend that you set up HTTPS certificates or use a reverse proxy if data has to be received from outside the company network.

The UXM Web agent and UXM Browser extensions require a valid HTTPS certificate, because data is sent directly from the user's browser using the same HTTP/HTTPS security as the monitored website. (UXM Desktop agents after version 2025.01.30 can send browser data through the UXM Desktop agent.)

| Used for | Reverse Proxy endpoint | Splunk consumer script |
|---|---|---|
| Desktop/Endpoint agent data receiving | https://fqdn/data/pcagent. Adds the request to the RabbitMQ queue. | bin\task_mq_consumer_pcagent.py reads from the RabbitMQ queue. |
| Web agent data receiving | https://fqdn/data/browser. Adds the request to the RabbitMQ queue. Has to respond with the following header: Access-Control-Allow-Origin: * | bin\task_mq_consumer_web.py reads from the RabbitMQ queue. |
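The header requirement for the web agent endpoint is easy to miss when a reverse proxy sits in front of the receiver. As a rough sketch, the helper below checks a set of response headers for `Access-Control-Allow-Origin: *`. The function names `allows_any_origin` and `fetch_headers` and the example URL are assumptions for illustration only, and `fetch_headers` raises `urllib.error.HTTPError` if the server answers with an error status.

```python
import urllib.request


def allows_any_origin(headers):
    """Return True if the headers contain Access-Control-Allow-Origin: *."""
    for name, value in headers.items():
        # Header names are case-insensitive, so compare in lower case.
        if name.lower() == "access-control-allow-origin":
            return value.strip() == "*"
    return False


def fetch_headers(url, timeout=10):
    """Fetch a URL and return its response headers as a plain dict."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return dict(resp.headers.items())


if __name__ == "__main__":
    # Offline demonstration with a hand-written header set.
    print(allows_any_origin({"Access-Control-Allow-Origin": "*"}))
    # Against a live receiver (hypothetical host name):
    # print(allows_any_origin(fetch_headers("https://uxm.example.com/data/browser")))
```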

- Ubuntu

- Red Hat

- Windows

Ubuntu:

sudo apt-get install nginx-light

See Configure NGINX for setting up NGINX

Red Hat: See Setting up and configuring NGINX for information on allowing traffic through the firewall and SELinux.

sudo yum install nginx

sudo usermod -a -G nginx splunk

# Add selinux dir

sudo mkdir -p /etc/nginx/selinux

# Allow through firewall

sudo firewall-cmd --permanent --add-port={80/tcp,443/tcp}

sudo firewall-cmd --reload

In SELinux enforcing mode, NGINX needs write permission on the sock_file to work with NGINX/wsgi. Write the following SELinux module to /etc/nginx/selinux/nginx.te:

module nginx 1.0;

require {

type httpd_t;

type var_run_t;

class sock_file write;

}

#============= httpd_t ==============

allow httpd_t var_run_t:sock_file write;

Compile and import the SELinux module:

sudo yum -y install checkpolicy

sudo checkmodule -M -m -o /etc/nginx/selinux/nginx.mod /etc/nginx/selinux/nginx.te

sudo semodule_package -o /etc/nginx/selinux/nginx.pp -m /etc/nginx/selinux/nginx.mod

sudo semodule -i /etc/nginx/selinux/nginx.pp

See Configure NGINX for setting up NGINX

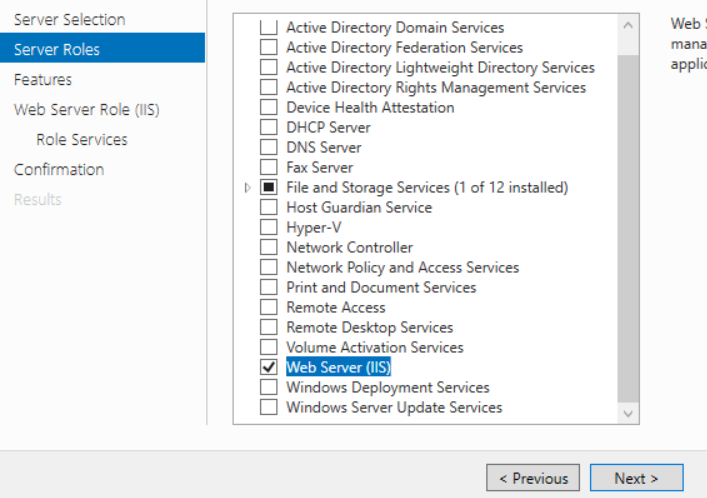

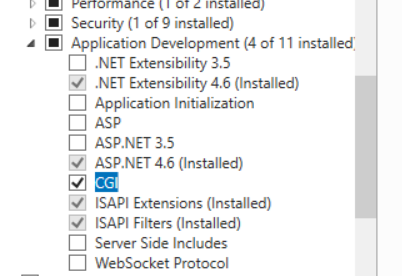

Windows prerequisites: Install the IIS role. This can be done from Server Manager by clicking "Add roles and features".

Select Web Server (IIS) under Server Roles:

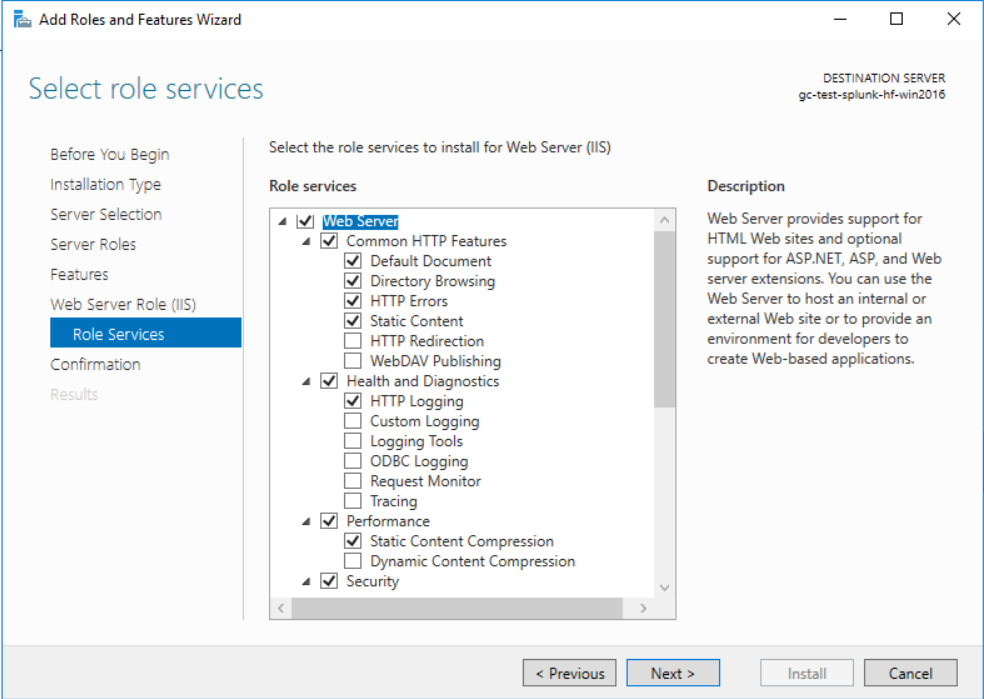

Press Next twice and select the following Web Server Role Service options

Ensure that CGI is checked under Application Development.

Enable wfastcgi in IIS by running:

"C:\Python313\Scripts\wfastcgi-enable"

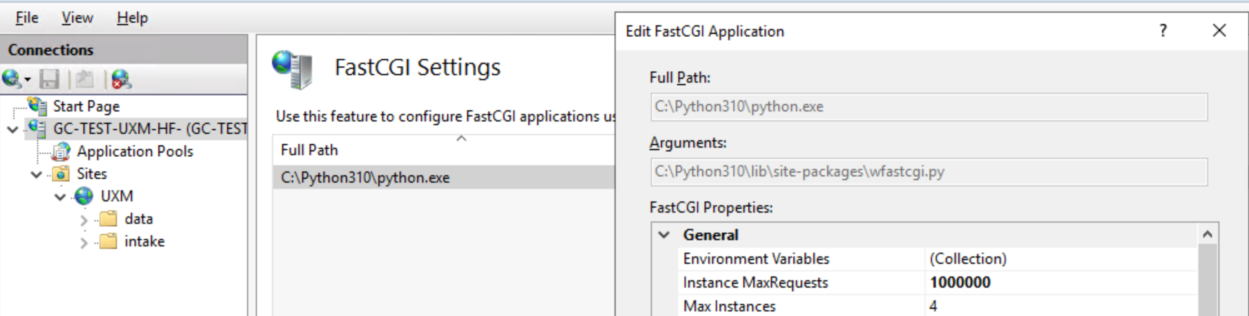

Open IIS Manager -> Server -> FastCGI Settings and edit the python item.

Set "Instance MaxRequests" to 1,000,000 and "Max Instances" to 4 so that FastCGI does not recycle too often.

Setup data collection website

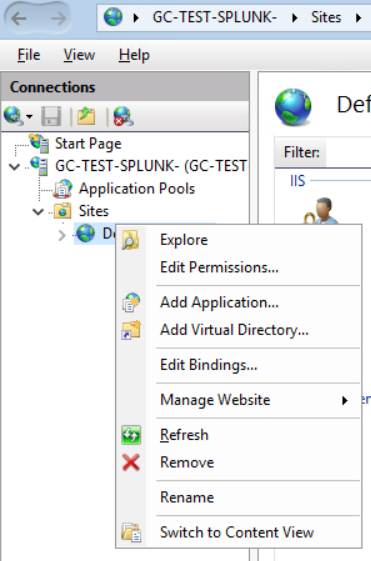

Open Internet Information Services Manager and remove the default site

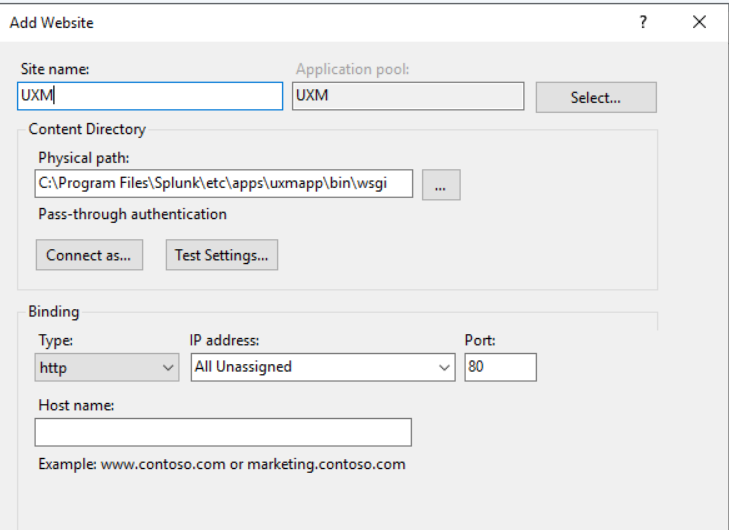

Right-click on Sites and select Add Website. Give it the following information:

- Site name: UXM

- Application pool: UXM

- Physical path: C:\Program Files\Splunk\etc\apps\uxmapp\bin\wsgi

- Binding: Port 80

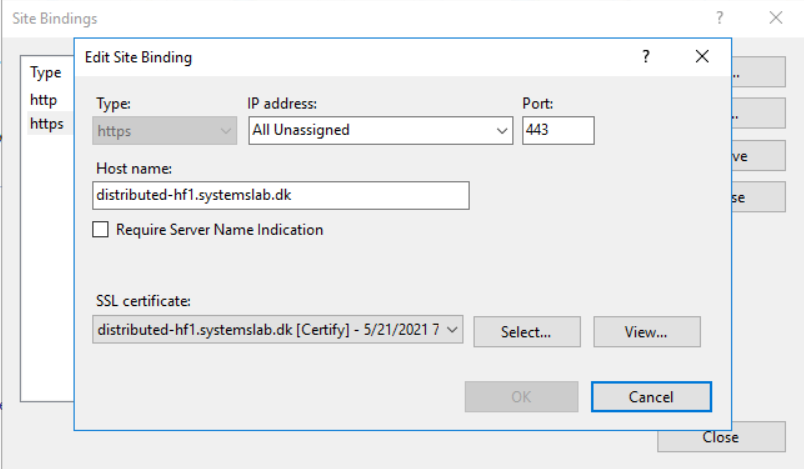

Edit the bindings and add an HTTPS binding to receive data from the UXM Web Agent or UXM Browser Extensions. This step can be skipped if you are offloading HTTPS to an external load balancer/reverse proxy.

Configure folder permissions

IIS/Python needs to be able to access the following folders. Execute the commands in an elevated command prompt:

icacls "C:\Program Files\Splunk\etc\apps\mcg\_uxm" /grant "IIS AppPool\UXM":(OI)(CI)(RX) /T

icacls "C:\Program Files\Splunk\etc\apps\uxmapp" /grant "IIS AppPool\UXM":(OI)(CI)(RX) /T

icacls "C:\Program Files\Splunk\etc\apps\search\lookups" /grant "IIS AppPool\UXM":(OI)(CI)(RX) /T

icacls "C:\Program Files\Splunk\share" /grant "IIS AppPool\UXM":(RX) /T

icacls "C:\Program Files\Splunk\etc\auth\splunk.secret" /grant "IIS AppPool\UXM":(R)

icacls "C:\Program Files\Splunk\var\log" /grant "IIS AppPool\UXM":(OI)(CI)(R,W,M) /T

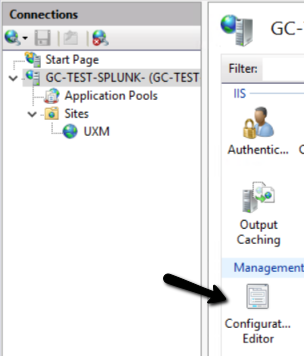

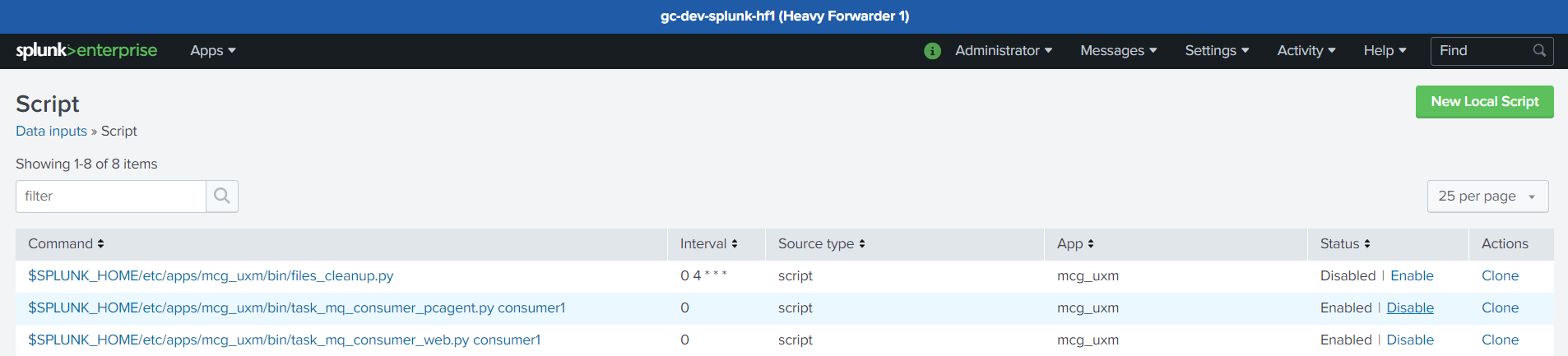

Unlock system.webServer/handlers

Open IIS Manager and go to root server and select Configuration Editor.

Select system.webServer/handlers and click "Unlock Section"

IIS can be tested by visiting FQDN/data/browser or IP/data/browser. UXM outputs "no get/post data received" if IIS works correctly.
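The same check can be scripted, which is convenient when several receivers have to be verified. This is only a sketch: the helper names `receiver_url`, `body_looks_healthy` and `check_receiver` and the host name are hypothetical, and the expected text is taken from the sentence above.

```python
import urllib.request

EXPECTED_TEXT = "no get/post data received"


def receiver_url(host, use_ssl=False):
    """Return the browser data endpoint for an FQDN or IP address."""
    scheme = "https" if use_ssl else "http"
    return f"{scheme}://{host}/data/browser"


def body_looks_healthy(body):
    """Return True if the response body contains the expected UXM text."""
    return EXPECTED_TEXT in body.lower()


def check_receiver(host, use_ssl=False, timeout=10):
    """Fetch the endpoint and report whether the expected text came back."""
    with urllib.request.urlopen(receiver_url(host, use_ssl), timeout=timeout) as resp:
        return body_looks_healthy(resp.read().decode("utf-8", errors="replace"))


if __name__ == "__main__":
    # Offline demonstration; no network access is needed for this line.
    print(receiver_url("uxm.example.com"))
    # Against a live receiver (hypothetical host name):
    # print(check_receiver("uxm.example.com"))
```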

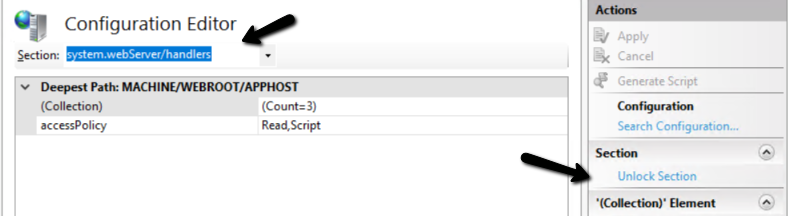

Enable Splunk Batch Processing Scripts

Enable the scripts for UXM Web and UXM Desktop agent data processing if those technologies are used.

Go to Settings -> Data Inputs -> Scripts and enable:

- "task_mq_consumer_pcagent.py consumer1"

- "task_mq_consumer_web.py consumer1"

Check for Errors

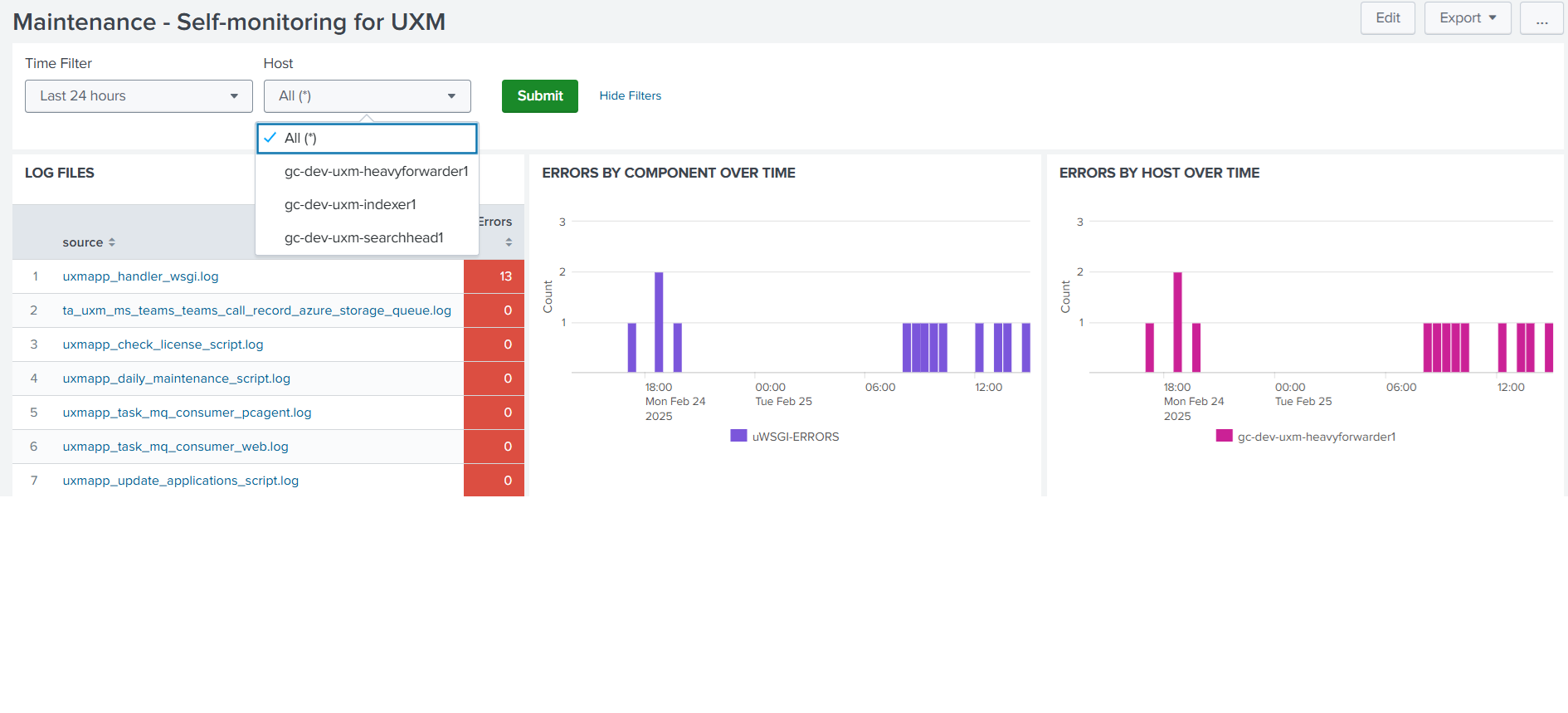

Open the UXM app on the Search Head and select Reports -> Maintenance Reports -> Maintenance - Self-monitoring for UXM. The dashboard shows the status of the installation and reports any errors detected.

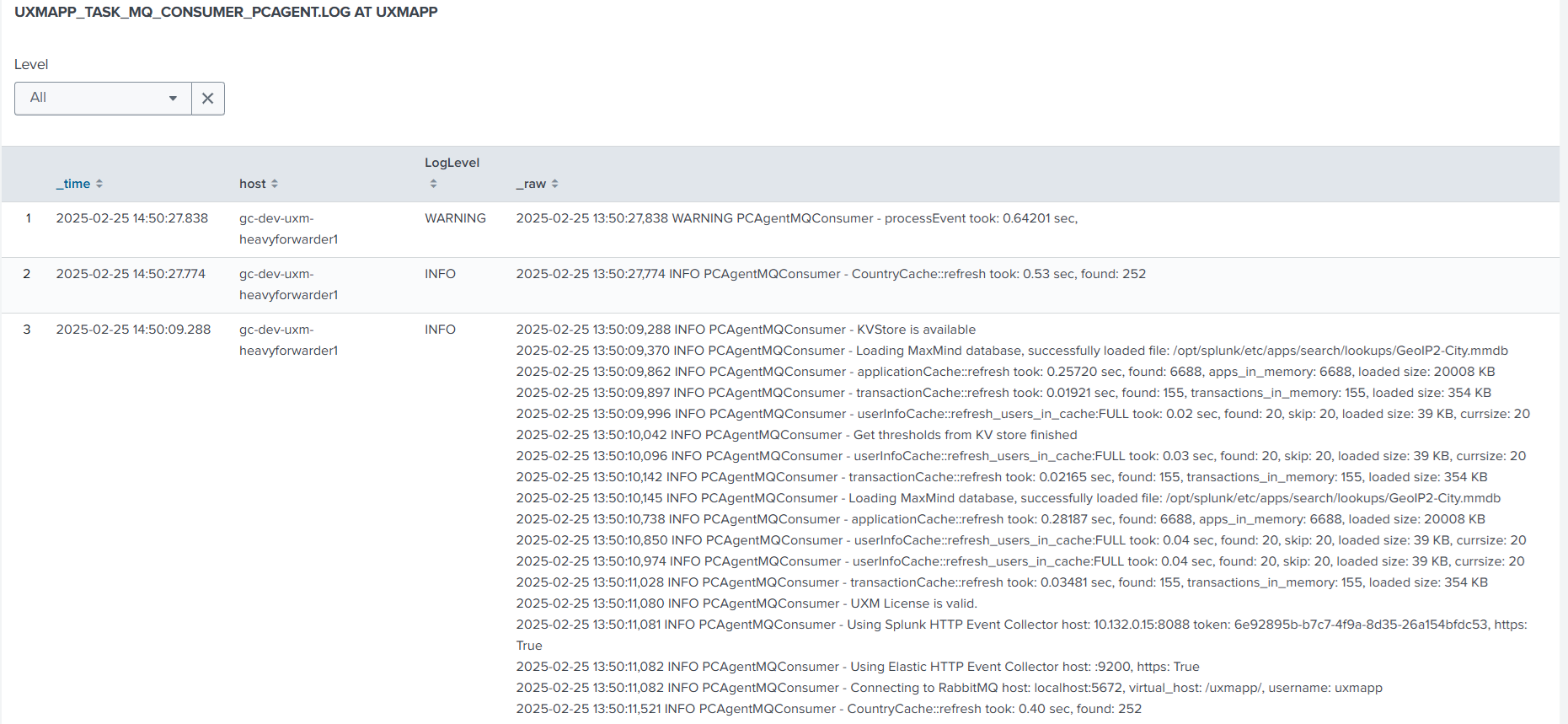

The PCAgent and Web consumers show the following info if everything works: